Graph Neural Operator for PDEs

The blog takes about 10 minutes to read. It introduces our recent work that uses graph neural networks to learn mappings between function spaces and solve partial differential equations. You can also check out the paper and code for more formal derivations.

Introduction

Scientific computations are expensive. It could take days and months for numerical solvers to simulate fluid dynamics and many-body motions. Because to achieve good accuracy, the numerical solvers need to discretize the space and time into very fine grids and solve a great number of equations on the grids. Recently, people are developing data-driven methods based on machine learning techniques such as deep learning. Instead of directly solving the problems, data-driven solvers learn from the data of the problems and solutions. When querying new problems, data-driven solvers can directly give predictions based on the data. Since they don’t need to discretize the space into very small pieces and solve all these equations, these data-driven solvers are usually much faster compared to traditional numerical solvers,

However, data-driven solvers are subject to the quality of the data given. If the training data is not good enough, they can’t make good predictions. In scientific computing, usually, the training data are generated by the traditional numerical solvers. And to generate good enough data, it still takes days and months for these numerical solvers. Sometime, data are observed from experiments and there are just no good training data. Especially, people consider neural networks as interpolators which may not be able to extrapolate. It is unclear whether neural networks can generalize to unseen data. So if the training data are of one resolution, the learned solvers can only solve the problem in this specific resolution. In general, generalization is a crucial problem in machine learning. It becomes a trade-off: these machine learning based methods make evaluation easier, but the training process could be even more painful.

To dealing with this problem, we purpose operator learning. By encoding certain structures, we let the neural network learn the mapping of functions and generalize among different resolutions. As a result, we can first use a numerical method generated some less-accurate, low-resolution data, but the learned solver is still able to give reasonable, high-resolution predictions. In some sense, both training and evaluation can be pain-free.

Operator Learning

In mathematics, operators are usually referring to the mappings between function spaces. You most likely have already encountered some operators. For example, the differentiation and integration are operators. When we take the derivative or do an indefinite integration of a function, we will get another function. In other words, the differentiation and integration are mappings from function space to function space.

In scientific computing, usually the problem is to solve some form of differential equations (PDEs). Consider a general differential equation of the form:

where and are some functions on the physical domain, and is some differential operator that maps the function to the function . Usually, and are given. The task is to solve for . That is, we want to learn an operator like the inverse of that maps the function to the function . So the problem of PDE is indeed an operator learning problem.

The classical development of neural networks has been primarily for mappings between a finite-dimensional Euclidean space and a set of classes (e.g. an image vector to a label), or between two finite-dimensional Euclidean spaces (e.g. an image vector to another image vector). However, many problems in physics and math involve learning the mapping between function spaces, which poses a limitation on the classical neural network based methods. Besides all these problem governed by differential equations, we are learning operators in many common machine learning setting. For a bold example, images should be considered as functions of light defined on a continuous region, instead of as pixel vectors.

In this work, we aim to generalize neural networks so that they can learn operators, the mappings between infinite-dimensional spaces, with a special focus on PDEs.

Limitation of Fixed Discretization

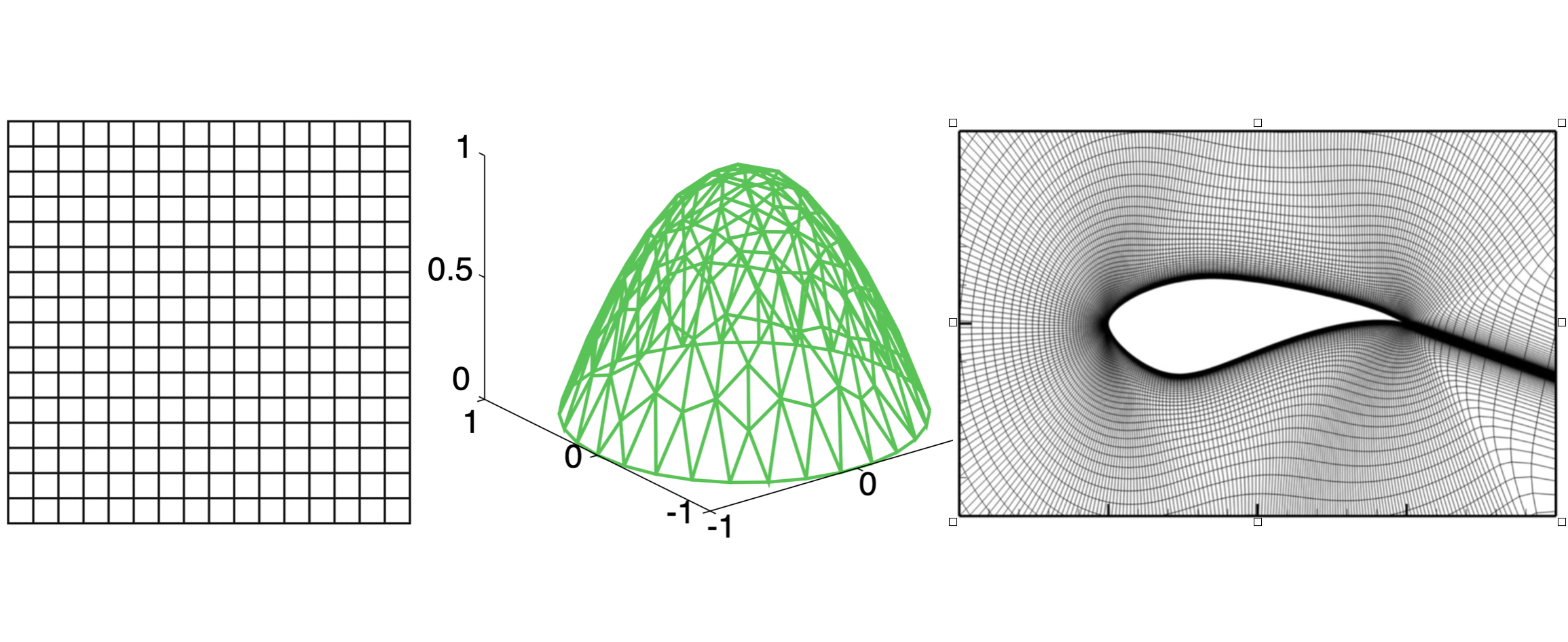

PDEs are, unfortunately, hard. Instead of learning the operator, people usually discretize the physical domain and cast the problem in finite-dimensional Euclidean space. Indeed, hundred years of effort has been made to develop numerical solvers such as the finite element method and finite difference method.

Just like how we store images by pixels in .PNG and .JPG formats, we need to discretize the domain of PDEs into some grid and solve the equation on the grid. It really makes the thing easier. These traditional numerical solvers are awesome, but they have some drawbacks:

- The error scales steeply with the resolution. We need a high resolution to get good approximations.

- The computation and storage steeply scale with the resolution (i.e. the size of the grid).

- When the equation is solved on one discretization, we cannot change the discretization anymore.

.PNG and .JPG formats are good. But sometimes maybe we want to save the images as vector images in .EPS or .SVG formats, so that it can be used and displayed in any context. And for some images, the vector image format is more convenient and efficient. Similarly, we want to find the continuous version for PDEs, an operator that is invariant of discretization.

Furthermore, mathematically speaking, such continuous, discretization-invariant format is in some sense, closer to the real, analytic solution. It has an important mathematical meaning. Bear the motivation in mind. Let’s develop a rigorous formulation.

Problem Setting

Let’s be more concrete. Consider the standard second order elliptic PDE

for some bounded, open domain and a fixed source function . This equation is prototypical of PDEs arising in numerous applications including hydrology and elasticity. For a given function , the equation has a unique weak solution and therefore we can define the solution operator as the map from function to function .

Our goal is to learn a operator approximating ,

by using a finite collection of observations of input-output pairs

, where each and are functions on .

In practice, the training data is solved numerically or observed in experiments.

In other words, functions and come with discretization.

Let be a -point discretization of the domain

and assume we have observations , for a finite

collection of input-output pairs indexed by .

We will show how to learn a discretization-invariant mapping based on discretized data.

Kernel Formulation

For a general PDE of the form:

Under fairly general conditions on , we may define the Green’s function as the unique solution to the problem

where is the delta measure on centered at . Note that will depend on the coefficient thus we will henceforth denote it as . Then operator can be written as an integral operator of green function:

Generally the Green’s function is continuous at points , for example, when is uniformly elliptic. Hence it is natural to model the kernel via a neural network . Just as the Green function, the kernel network takes input . Since the kernel depends on , we let also take input .

As an Iterative Solver

In our setting, is an unknown but fixed function. Instead of doing the kernel convolution with , we will formulate it as an iterative solver that approximated by , where is the time step.

The algorithm starts from an initialization , for which we use . At each time step , it updates by an kernel convolution of .

It works like an implicit iteration. At each iteration the algorithm solves an equation of and by the kernel integral. will be output as the final prediction.

To further take the advantage of neural networks, we will lift to a high dimensional representation , with the dimension of the hidden representation.

The overall algorithmic framework follow:

where and are two feed-forward neural networks that lifts the initialization to hidden representation and projects the representation back to the solution , respective. is an activation function such as ReLU. the additional term is a linear transformation that acts on $v$. Notice, since the kernel integration happens in the high dimensional representation, the output of is not a scalar, but a linear transformation .

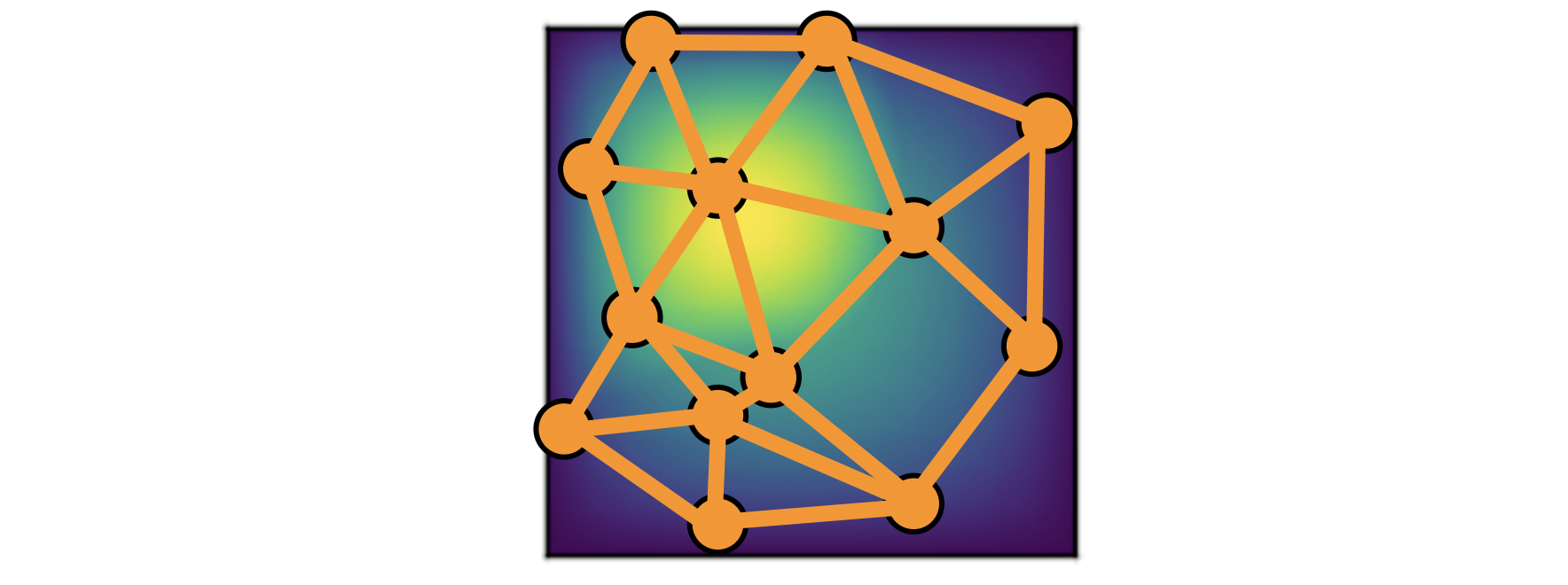

Graph Neural Networks

To do the integration, we again need some discretization.

Assuming a uniform distribution of ,

the integral can be approximated by a sum:

Observation: the kernel integral is equivalent to the message passing on graphs

If you are similar with graph neural network, you may have already realized this formulation is the same as the aggregation of messages in graph network. Message passing graph networks comprise a standard architecture employing edge features (gilmer et al, 2017).

If we properly construct graphs on the spatial domain of the PDE, the kernel integration can be viewed as an aggregation of messages. Given node features , edge features , and a graph , the message passing neural network with averaging aggregation is

where , is the neighborhood of according to the graph, is a neural network taking as input edge features and as output a matrix in . Relating to our kernel formulation, .

Nystrom Approximation

Ideally, to use all the information available, we should construct nodes in the graph for all the points in the discretization , which will create edges. It is quite expensive. Thankfully, we don’t need all the points to get an accurate approximation. For each graph, the error of Monte Carlo approximation of the kernel integral scales with , where is the number of nodes sampled.

Since we will sample graphs in total for all training examples , the overall error of the kernel is much smaller than . In practice, sampling nodes is sufficient for points.

It is possible to further improve the approximation using more sophisticated Nystrom Approximation methods. For example, we can estimate the importance of each points, and add more nodes to the difficult and singularity areas in the PDEs.

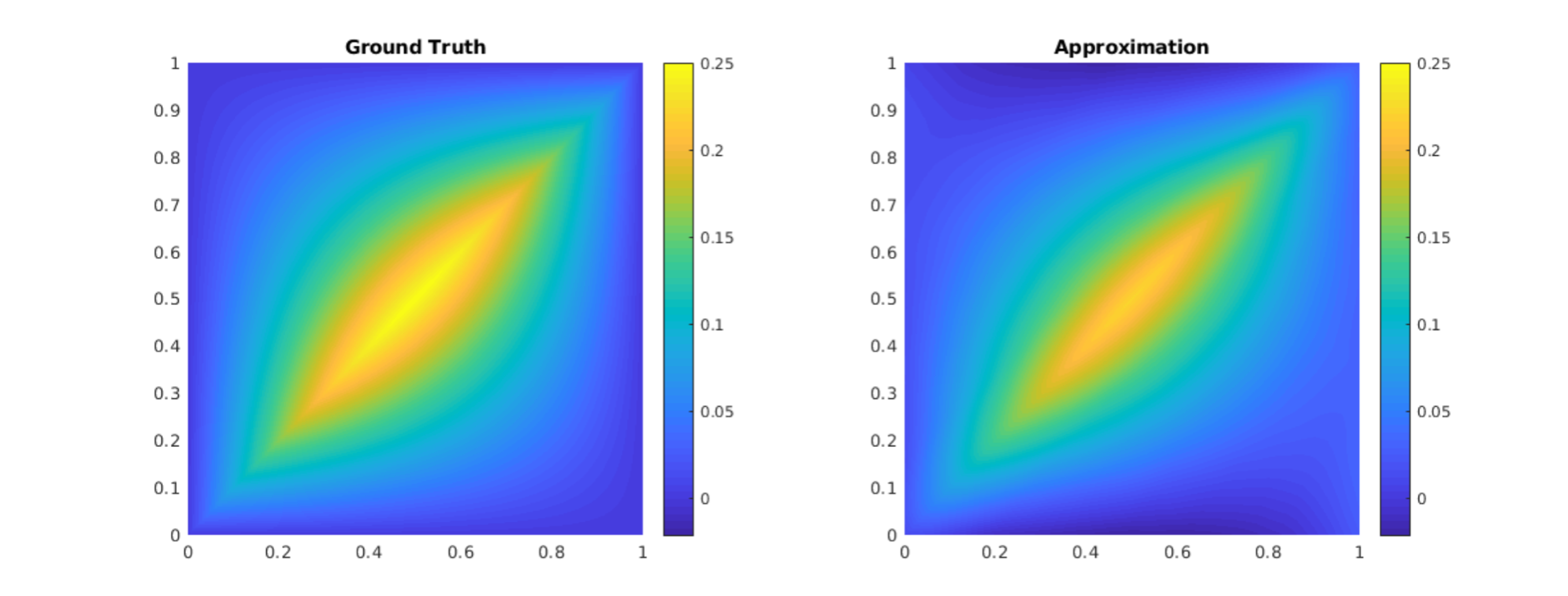

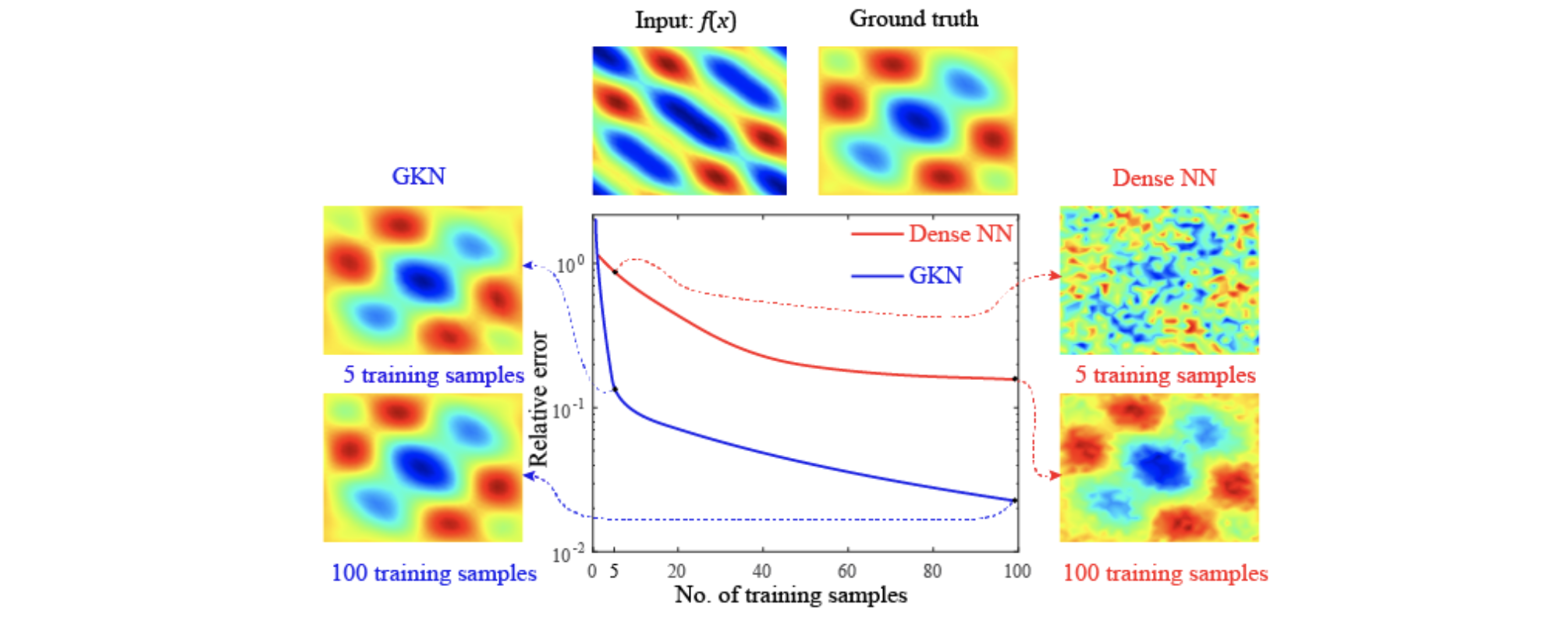

Experiments: Poisson Equations

First let’s do a sanity check. Consider a simple poisson equation:

We set and , using one iteration of the graph kernel network to learn the operator .

poisson equation

As shown in the figure above, we compare the true analytic Green function (left) with the learned kernel (right). The learned kernel is almost the same as the true kernel, which means are neural network formulation does match the Green function expression.

poisson equation

By assuming the kernel structure, graph kernel networks need only a few training examples to learn the shape of solution . As shown in the figure above, the graph kernel network can roughly learn with training pairs, which a feedforward neural network need at least training examples.

Experiments: generalization of resolution

For the large scale experiments, we use Darcy equation of the form

and learn the operator .

To show the generalization property, we train the graph kernel network with nodes sampled from the resolution and test on another resolution .

| Resolutions | s’=61 | s’=121 | s’=241 |

|---|---|---|---|

| s=16 | 0.0717 | 0.0768 | 0.0724 |

| s=31 | 0.0726 | 0.0710 | 0.0722 |

| s=61 | 0.0687 | 0.0728 | 0.0723 |

| s=121 | 0.0687 | 0.0664 | 0.0685 |

| s=241 | 0.0649 | 0.0658 | 0.0649 |

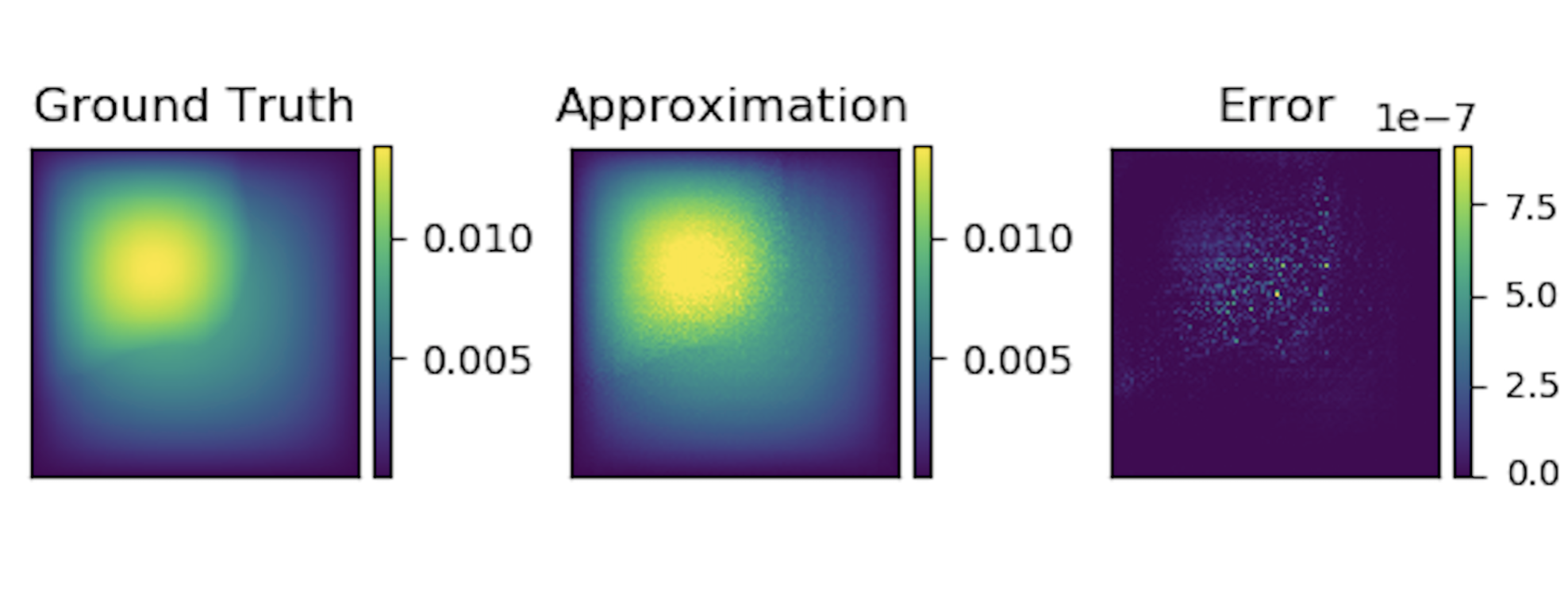

As shown in the table above for each row, the test errors on different resolutions are about the same, which means the graph kernel network can also generalize in the semi-supervised setting. An figure for is following (where error is absolute squared error):

Experiments: Comparision of Benchmarks

Finally, we compare graph network with different benchmarks on Darcy equation in the fixed discretization setting where the resolution .

| Networks | s=85 | s’=141 | s’=211 | s’=421 |

|---|---|---|---|---|

| NN | 0.1716 | 0.1716 | 0.1716 | 0.1716 |

| FCN | 0.0253 | 0.0493 | 0.0727 | 0.1097 |

| PCA+NN | 0.0299 | 0.0298 | 0.0298 | 0.0299 |

| RBM | 0.0244 | 0.0251 | 0.0255 | 0.0259 |

| GKN | 0.0346 | 0.0332 | 0.0342 | 0.0369 |

-

NN is a simple point-wise feedforward neural network. It is mesh-free, but performs badly due to lack of neighbor information.

-

FCN is the state of the art neural network method based on Fully Convolution Network[ZhuandZabaras,2018]. It has a dominating performance for small grids . But fully convolution networks are mesh-dependent and therefore their error grows when moving to a larger grid. Besides the scaling of computation, one needs to work hard on parameter-tuning for each specific resolution.

-

PCA+NN is an instantiation of the methodology proposed in [Bhattacharya et al., 2020]: using PCA as an auto-encoder on both the input and output data and interpolating the latent spaces with a neural network. The method provably obtains mesh-independent error and can learn purely from data, however the solution can only be evaluated on the same mesh as the training data.

-

RBM is the classical Reduced Basis Method (using a PCA basis), which is widely used in applications and provably obtains mesh-independent error [DeVore, 2014]. It has the best performance but the solutions can only be evaluated on the same mesh as the training data and one needs knowledge of the PDE to employ it.

-

GKN stands for our graph kernel network. It enjoys competitive performance against all other methods while being able to generalize to different mesh geometries.

Conclusion

In the work we purposed to use graph networks for operator learning in PDE problems. By varying the underlying graph and discretization, the learned kernel is invariant of the discretization. Experiments confirm the graph kernel networks are able to generalize among different discretization. And in the fixed discretization setting, the graph kernel networks also have good performances compared to several benchmark.